Blue Brain: modelling the neocortex at the cellular level

First Deep Blue. Then Blue Gene. Now, Blue Brain.

Solve chess, next tackle the wonders of the gene, then unravel the mysteries of the brain for an encore?

Sort of. Deep Blue is now in a museum, an ultimately unsatisfying technological tour de force that accomplished little more than demonstrating that the complexity parameters of chess put it within reach of your average supercomputer.

And Blue Gene is not really designed to do anything with genes, although it’s been used to do molecular simulations. It’s cleverly named to create the image of a family of supercomputing projects, but in fact has nothing to do with Deep Blue, and at heart is a massive science fair project to see how many teraflops you get when you string together 32K nodes.

Blue Brain is the catchy name of the latest project, a partnership with a Swiss university (EPFL) to use Blue Gene to model the human brain.

Although this project has been widely reported, most of the commentary has been at the level of calling the project a “virtual brain”, claiming, for example:

> the hope is that the virtual brain will help shed light on some aspects of human cognition, such as perception, memory and perhaps even consciousness.

Wow, a thinking computer that’s also conscious.

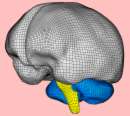

But readers of Numenware will want to understand the research plan in a bit more detail. The first project is a cellular-level model of a neocortical column. They’ll simulate 10,000 neurons and 100 million synapses (yes, there really are that many synapses on 10,000 neurons). They’re going to use 8,000 nodes, so it would seem obvious to have one node per neuron, but that doesn’t appear to be the approach. They say the simulation will run in “real time”, but shouldn’t it be able to go faster? Of course they’ll have snazzy visualization systems. Hey, can I go for a “walk” among your neocortical columns?
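The ratios behind those figures are easy to check. Here is a minimal back-of-the-envelope sketch in Python, using only the numbers quoted above. The even split across nodes is my own assumption for illustration; the project has not said that this is how it will distribute the work.

```python
# Figures quoted in the post for the first Blue Brain project.
NEURONS = 10_000
SYNAPSES = 100_000_000
NODES = 8_000  # Blue Gene nodes the project plans to use


def per_unit(total: int, units: int) -> float:
    """Average count of `total` items per unit, assuming an even split."""
    return total / units


synapses_per_neuron = per_unit(SYNAPSES, NEURONS)
neurons_per_node = per_unit(NEURONS, NODES)    # assumes an even split (hypothetical)
synapses_per_node = per_unit(SYNAPSES, NODES)  # assumes an even split (hypothetical)

print(f"synapses per neuron: {synapses_per_neuron:,.0f}")
print(f"neurons per node:    {neurons_per_node:.2f}")
print(f"synapses per node:   {synapses_per_node:,.0f}")
```

So each neuron averages about 10,000 synapses, and an even split would put slightly more than one neuron on each node. That is consistent with one node per neuron not being the plan.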

From there, the researchers hope to go down, and up. They’ll go down to the molecular level, and up to the level of the entire neocortex. To do the latter will require a simplified model of the neocortical columns, which they hope to be able to derive from the first project. They’ll eventually move on to the subcortical parts of the brain and, before you know it, your very own virtual brain.

It’s undoubtedly true that this is “one of the most ambitious research initiatives ever undertaken in the field of neuroscience,” in the words of EPFL’s Henry Markram, director of the project. But I wonder if the kind of knowledge we gather about brain functioning from this project will be the same kind of knowledge we gathered about chess from Deep Blue.

Markram has a very micro focus. For instance, he has sliced up thousands of rat brains and stained them and stimulated them and cataloged them. And this whole project has the same intensely micro focus. That’s extremely valuable, but it’s like building a supercomputer simulation of how gasoline ignites in order to understand how a car runs, when we don’t even understand the roles of the carburetor and fuel pump and combustion chamber, to borrow an overused analogy.

For instance, I’m sure Blue Brain will cast light on the mechanisms underlying memory, but when these guys say “memory” they mean synaptic plasticity. What I want to know is how I remember my beloved Shiba-ken, Wanda, who was hit and killed by a car in Kamakura.

It seems to me we don’t need supercomputers to model the brain, although I’m sure they’ll be useful; we need concepts to model. The actual model could be no bigger than Jay Forrester’s ground-breaking system dynamics model of the world’s socioeconomic system. The problem is not the technology for modeling—it’s what we model.

The same goes for neurotheology. We desperately need a computer model, but before that—we need a theory.
